Embedded devices are all the rage these days among hobbyists, makers and students of engineering and embedded systems. Devices like the BeagleBone Black, Raspberry Pi and Intel Galileo have lowered the barrier to entry into embedded computing further than we’ve seen in decades, and that trend shows no sign of abating. Just R500, for example, can get you set up with a Raspberry Pi that boots from an SD card loaded with any of a great selection of the best distros around. These boards are more than just credit-card-sized computers, though. They’re designed to be extended via general-purpose I/O (GPIO) pins and hardware-attached-on-top boards, so their applications are vast: anything from a smart medicine cabinet manager to a networked, picture-taking, web-server-hosting all-in-one solution powered by Python (another great reason to learn Python today). Recently, though, more advanced uses have arisen for these little silicon wonders, and that’s where nVidia’s Jetson TX1 platform, with its ‘Maxwell’ architecture graphics processing unit (GPU), comes in.

A brief primer: Neural Networks and x86 Grunt

Artificial intelligence (including the subfield of computer vision) and the implementation of neural networks have long been studied by computer scientists the world over. That work has mostly been confined to full-fat desktop computers running MATLAB or some other huge, complex software package that needs the full grunt of an x86 processor. This is because the tasks are inherently demanding: crunching through vast training datasets, applying neuron transfer functions in each hidden layer, computing target matrices, and so on. The end result, though, is that computers can ‘learn’ from a set of training data: they can take something new from an external source (say, a camera) that is similar to what they were trained on, and classify it with some degree of probability. For the user, this classification usually means something intuitive, such as “the user is waving their hands” or “this footprint is a zebra print.”
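To make the ‘transfer functions per hidden layer’ and ‘classify with some degree of probability’ ideas a little more concrete, here is a minimal sketch of a tiny network’s forward pass in plain Python. Everything in it is an assumption made for illustration: the weights are hand-picked rather than learned, the two-number input stands in for real image features, and the function names are hypothetical. A real network would be far larger and would learn its weights from training data.

```python
import math

def sigmoid(x):
    """Transfer (activation) function: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One layer: each neuron computes a weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(features, hidden_w, hidden_b, out_w, out_b, labels):
    """Forward pass: input -> hidden layer -> output layer -> (label, probability)."""
    hidden = [sigmoid(z) for z in dense(features, hidden_w, hidden_b)]
    probs = softmax(dense(hidden, out_w, out_b))
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]

# Hypothetical, hand-picked weights (a trained network would learn these).
HIDDEN_W = [[2.0, -1.0], [-1.5, 2.5]]
HIDDEN_B = [0.0, 0.1]
OUT_W = [[3.0, -2.0], [-2.0, 3.0]]
OUT_B = [0.0, 0.0]
LABELS = ["zebra print", "not a zebra print"]

# Two made-up feature values standing in for a processed camera image.
label, probability = classify([0.9, 0.2], HIDDEN_W, HIDDEN_B, OUT_W, OUT_B, LABELS)
print(f"{label} (p = {probability:.2f})")
```

Training is the expensive part that this sketch leaves out: it repeats passes like this over a large dataset while adjusting the weights, which is where the heavy number crunching comes from.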

The Embedded Advantage

So what’s the appeal? Why would you abandon something like Intel’s behemoth new x86-based Skylake Core i7 processor, in favour of a tiny embedded system, when it comes to computing these complex artificial intelligence tasks?

To understand why, a quick overview of the case for GPU computing (such as that found in the Jetson TX1) is useful. Here’s how nVidia, the GPU maker in question, describes the process.

GPU computing uses the graphics chip, a separate processor that sits alongside the CPU, to accelerate many scientific, engineering and analytic computations. The ‘compute’ parts of the running application are offloaded to the GPU, leaving the CPU free to run the rest of the code. Why does this improve performance? CPUs and GPUs process tasks differently. A CPU generally has a handful of cores optimised for processing code sequentially (one instruction after the other), whereas a GPU has a massively parallel architecture with far more cores (hundreds or even thousands). These cores are smaller but more efficient, and are designed to handle many tasks simultaneously. This sort of architecture makes GPUs ideal for artificial intelligence applications, which need to process vast amounts of data to achieve their goal.
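The sequential-versus-parallel distinction can be sketched in a few lines of Python. To be clear, this is a conceptual simulation only: it runs entirely on the CPU, the function names are hypothetical, and the ‘step’ count is an idealised assumption that ignores memory transfers and scheduling. The point is simply that when each output element depends only on its own inputs, the work can be handed out to many lanes at once.

```python
def scale_and_add_kernel(i, a, x, y, out):
    """Work for ONE element. It reads and writes only index i,
    so no element depends on any other element's result."""
    out[i] = a * x[i] + y[i]

def run_sequential(a, x, y):
    """CPU-style: a single core walks the data one element after the other."""
    out = [0.0] * len(x)
    for i in range(len(x)):
        scale_and_add_kernel(i, a, x, y, out)
    return out

def run_in_lanes(a, x, y, lanes):
    """GPU-style schedule, simulated on the CPU.

    The data is cut into batches of `lanes` elements. On real parallel
    hardware every element of a batch would be processed in the same time
    step, so we count one idealised step per batch.
    """
    out = [0.0] * len(x)
    steps = 0
    for start in range(0, len(x), lanes):
        for i in range(start, min(start + lanes, len(x))):
            scale_and_add_kernel(i, a, x, y, out)
        steps += 1
    return out, steps

x = [float(i) for i in range(1024)]
y = [1.0] * 1024

sequential = run_sequential(2.0, x, y)
parallel, steps = run_in_lanes(2.0, x, y, lanes=256)

print(parallel == sequential)
print(f"sequential steps: {len(x)}, idealised 256-lane steps: {steps}")
```

Both versions produce identical results; only the schedule differs. Neural network workloads consist largely of this kind of independent, element-by-element arithmetic, which is why they map so well onto a GPU.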

With its new ARM-based Jetson TX1 embedded system, nVidia set out to answer these questions on the following fronts:

- Power consumption, for starters. Intel’s desktop Skylake Core i7 chips are rated at a TDP of 65W to 91W (the latter for the i7-6700K), which feels like the fuel consumption of your average Toyota Hi-Ace taxi with a hole in its exhaust compared to the “under 10W” Prius-like equivalent in the Jetson TX1.

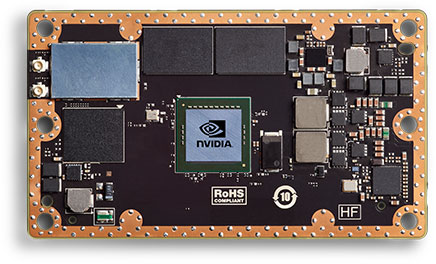

- The footprint is another one. Intel’s socketed desktop CPUs aren’t large per se, but once you’ve added decent cooling, heatsinks, a motherboard and the rest of the desktop, the whole system is fairly large: shoebox-sized at best. Again, the Jetson has it beat, considering it’s roughly wallet-sized. This matters a great deal if such platforms are to end up in smart delivery drones, helper robots and other places where being able to squeeze in a petite dev board is a boon.

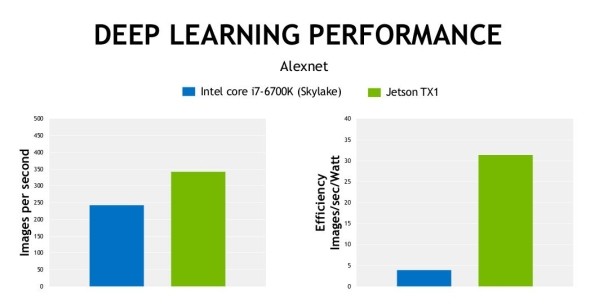

- Performance. Wait, what? x86 beaten by ARM-based devices? Well, not technically. We consider performance-per-watt in this case. nVidia ran some tests in which they trained a neural network to differentiate between objects: pedestrians, cars, motorcycles and cyclists. This sort of comparison is useful if your system will be controlling a driverless car, or figuring out whether the delivery drone it’s in is at the right home. In this image classification task, the TX1 outperformed the i7 while drawing far less power. This comes down to the GPU computing discussed previously: the Core i7 6700K tested has a quad-core CPU, and the Jetson likewise uses a modest quad-core ARM part. The difference is that the Jetson pairs its CPU with a 256-core Maxwell architecture GPU, and this is what gives it the performance boost to match the compute ability of the Intel i7. Check out their test results:

So the Jetson TX1 is very specialised, but it does that task really well, whilst being far smaller and less power-hungry than its x86 cousin. What’s more, it runs Ubuntu, meaning the full ecosystem of Linux programs and tools is at your fingertips when you’re coding for the Jetson.
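The performance-per-watt idea boils down to simple arithmetic, sketched below. The figures are placeholders chosen purely to illustrate the calculation; they are not measurements of the Jetson TX1, the Core i7 or any other real chip, and the function name is hypothetical.

```python
def performance_per_watt(images_per_second, watts):
    """Throughput delivered for each watt of power drawn."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return images_per_second / watts

# Placeholder figures for illustration only; NOT measurements of any real chip.
boards = {
    "small embedded board": {"images_per_second": 100.0, "watts": 10.0},
    "desktop processor": {"images_per_second": 250.0, "watts": 80.0},
}

efficiency = {name: performance_per_watt(**spec) for name, spec in boards.items()}

# List the most efficient device first.
for name, value in sorted(efficiency.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {value:.2f} images/s per watt")
```

With these made-up numbers the desktop part is faster in absolute terms, yet the small board does more work for every watt it draws. That is the metric that matters for battery-powered drones and robots.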

Why Should You Care?

If you’re in Computer Engineering, Embedded Systems or similar, the embedded aspect is likely familiar to you. As a Computer Science specialist, you’ll likely be excited by computer vision and artificial intelligence arriving in new, smaller form factors. As for everyone else? What makes this a big deal? Well, coding solutions to everyday problems is the continuous goal for those in the field, and it could be for you too! By knowing how to program, you could be using devices very similar to this one to power your own projects, allowing computers to learn, to see, to fly (in the case of drones) and ultimately to improve the world, one neural net at a time.

Interested in the Jetson TX1 from nVidia? Check it out here. Want to make a difference in your world with programming? Get started for free at Hyperion! Share your views on this article in the comments section below, and follow Hyperion Hub developments if you’d like to see more articles like this one for the South African market.

Author: Matthew de Neef

Date originally published: 04/01/16