Artificial Intelligence (AI) is drastically changing the way in which we live, work, communicate as well as interact with the world around us. One of the rapidly growing fields within AI that is driving exponential technological development across industries is Computer Vision.

Computer Vision aims to program computers to perceive and understand visual information in the same way that humans can. In essence, Computer Vision researchers aim to make computers do what humans do effortlessly with their eyes – see and understand the world. It is this kind of technology that makes autonomous vehicles, robots and image searches and many other advancements in tech possible today.

Understanding the field of Computer Vision

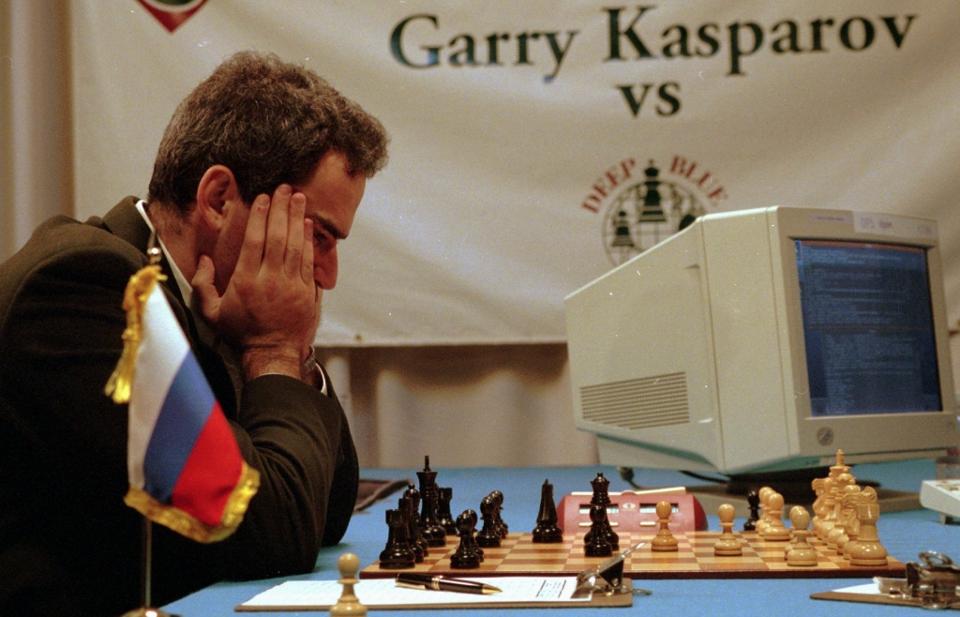

So what exactly makes the field of Computer Vision so complex? If we as humans can perceive information from the world around us, surely we can easily replicate this in machines. However, this is precisely what makes it so difficult. Since we are able to process visual information without thinking, we do not know what algorithms to program into our computers to make them “see” like us. This is different from a problem like playing chess, where there are a set number of rules and pieces to move. Although all the possible moves are very difficult to keep track of for a human, it is trivial for a computer, and as a result, a computer called “Deep Blue” beat the world’s best chess player, Garry Kasparov, as far back as 1996.

Computer Vision is a different problem though – we do not know what the “rules” for seeing and understanding are, and therefore cannot code explicit knowledge into our programs. As a result, many modern Computer Vision techniques rely on analysing patterns arising in large amounts of visual data (such as photographs and videos) using a method known as “Machine Learning.” And through this process, we hope that computers will learn the “rules” to vision by themselves.

There are numerous Computer Vision systems in use today, and many more related technologies due to be released in the near future. Here are a few examples:

Making Sense of all the Visual Data on the Internet

The explosion of images and videos on the internet, via services such as social media and YouTube, is making Computer Vision increasingly relevant as there is a need to develop automated algorithms to organise and understand the vast amount of images and videos out there.

State-of-the-art Computer Vision algorithms are employed every time you search for an image on Google, for example. Initially, the search engine retrieved images based on their textual descriptions. However, Google’s algorithms now analyse the actual pixels of an image to return the best results whilst also filtering out indecent content. Similarly, Facebook has face recognition algorithms which help to identify and tag people in photographs. This facial recognition software is very similar to the face recognition systems that are also employed in airports and security systems.

Enabling Autonomous Robots and Vehicles

Computer Vision algorithms are used extensively in driverless vehicles and autonomous robots, as this technology enables them to perceive and understand their surrounding environment.

One of the key problems in autonomous navigation is Semantic Image Segmentation. To avoid obstacles, robots need to know precisely where objects are. The task of Semantic Segmentation involves labelling every single pixel in an image with its object category to provide this fine-grained knowledge to robots. There’s actually an online demo of such a system, where you can try out your own images.

3D Reconstruction

Another complex problem within the Computer Vision field is that photographs are only a two-dimensional representation of the world. As humans, we are easily able to understand the differing depths of various objects, as well as their relational depth to one another. A prominent branch of active Computer Vision research is devoted to the field of 3D reconstruction – which involves recreating three-dimensional representations of the world (and this is a field of Computer Vision which does not make much use of Machine Learning but rather theory from optics and mathematics). 3D reconstruction algorithms allow robots to build detailed 3D maps of the environment which they are exploring. If you are interested, there is source code for an open-source reconstruction engine.

Helping the partially sighted to see

If we can program computes to see the world around them, then we can use these algorithms to augment those who have impaired vision. These “smart specs” are equipped with cameras and a portable onboard computer and help to enhance the vision of the partially sighted by highlighting salient objects in the environment. These glasses are currently undergoing field trials and will be on sale in the United Kingdom in 2016.

Interested in learning more about the explosive field of AI and computer vision? Our Data Science Bootcamp is a great place to start. Our mentor-led model teaches you job-ready, practical data analysis, programming, and analytics, that you’ll need to start working as a data scientist in as little as 3 months. What’s more, you’ll receive ongoing support in preparing for and finding your first job in our Graduate Program. Enrol for your part-time or full-time online coding bootcamp.

Editors note: this was originally published on 8 November 2017.