Curious about running an operating system from within another operating system? This practice is called local desktop virtualisation, and it has plenty of practical uses.

What is local desktop virtualisation?

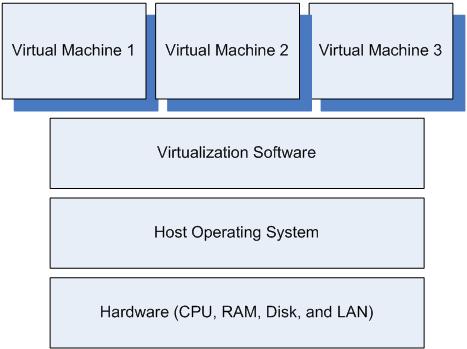

Virtualisation, in general, is the practice of separating the logical execution of operating system software from the physical hardware it runs on. This means that many hardware components, even ones that are logically or geographically separate, can be presented to the software as a single computer. However, this form of virtualisation is mostly an enterprise concern, so you’re more likely to encounter local desktop virtualisation. Local desktop virtualisation uses software to emulate a virtual computer system on local hardware, from within an existing operating system running on that same hardware. The computer that runs the virtualisation software is known as the host, whilst the guest is the “virtual machine” (VM) that runs inside that software on the host.

The VM software itself, referred to as the hypervisor, uses the hardware of your host computer to simulate a separate guest machine. It allocates a share of the host’s resources, such as RAM, CPU cores, USB peripherals, and keyboard and mouse input, to an operating system running in a virtual environment. In effect, the VM is an isolated environment running on the host computer’s hardware platform.

The guest is a complete operating system, but it only “sees” the host’s hardware through the hypervisor, which defines what resources are passed through. This means that, if necessary, the hypervisor can present the VM with different hardware from what your PC actually has. Besides the resources mentioned above, network adapters, USB devices, and shared folders can also be shared between host and guest.

Why is virtualisation useful?

Running VMs has several benefits. The first is compatibility: how often have you needed another operating system, such as an older version of Windows, because some software package won’t work on the newer, shinier releases? The second is security: you can test-drive a beta OS or run a sandbox isolated from your personal data, without having to switch between OSes. The third is convenience: if you have a task that needs a Linux terminal, for example, it’s much easier to simply use a Linux VM. You’ll save yourself the hassle of re-partitioning your storage, setting up a dual-boot system, or accidentally overwriting your bootloader. Finally, you can set up a separate VM for each use case and keep using your host OS at the same time, because each VM runs in its own application window.

Beyond running one OS inside another on a desktop, VMs are often used on powerful, resource-rich servers, with each VM playing a different role: for example, one as a file server, another as a database server, and so on.

Naturally, running a hypervisor with a VM means giving up some of your host OS’s resources while the VM is running. You’ll need enough RAM, disk space, and CPU power to allocate to your VM, while leaving enough for your host OS so that it doesn’t choke for lack of resources.

If you’re interested in applying these concepts in practice, check out our tutorial on setting up VirtualBox on Windows and installing Ubuntu as a VM.

Share your views on this article in the comments section below, and follow Hyperion Hub developments if you’d like to see more articles like this for the South African market.